For years, your PostGIS database has been your faithful companion, guardian of your geographic data, diligently responding to your most convoluted requests. And yet, despite these good and loyal services, so is the logic of the world: we must always move, evolve, upgrade! It is then time to upgrade this old cluster too.

This is when the problems begin: between the breaking changes of the major versions of PostgreSQL, the deprecated functions of PostGIS which end up being removed and the size of this old cluster which has grown large over the years, the operation may not be simple! This article will detail the upgrade procedure and will evoke some points of vigilance to keep in mind to migrate with serenity.

We will only talk about major version migrations. Indeed, for minor versions of PostgreSQL, there is nothing to do and for PostGIS just use:

Backups are still recommended, but they are done on a regular basis anyway, right?

Secure your migration

A successful migration is a secured one. You need 3 main points for that:

- ensure data sustainability

- plan and minimize the db downtime

- manage the human factor, by communicating clearly about this dowtime and the whole migration process.

This last point may seem obvious, but I think it’s important to point it out nonetheless. Doing this correctly often determines the feeling of success or failure of involved or affected person, thus the future capability of your organisation to absorb this kind of change. Past experience – bad or good – can have a lot of influence on the way we’re able to move forward as a human group. We won’t say more on this big subject (it would take an entire book). It’s up to you to involve the relevant person of your organisation in the right way!

Now, let’s go through the technical points.

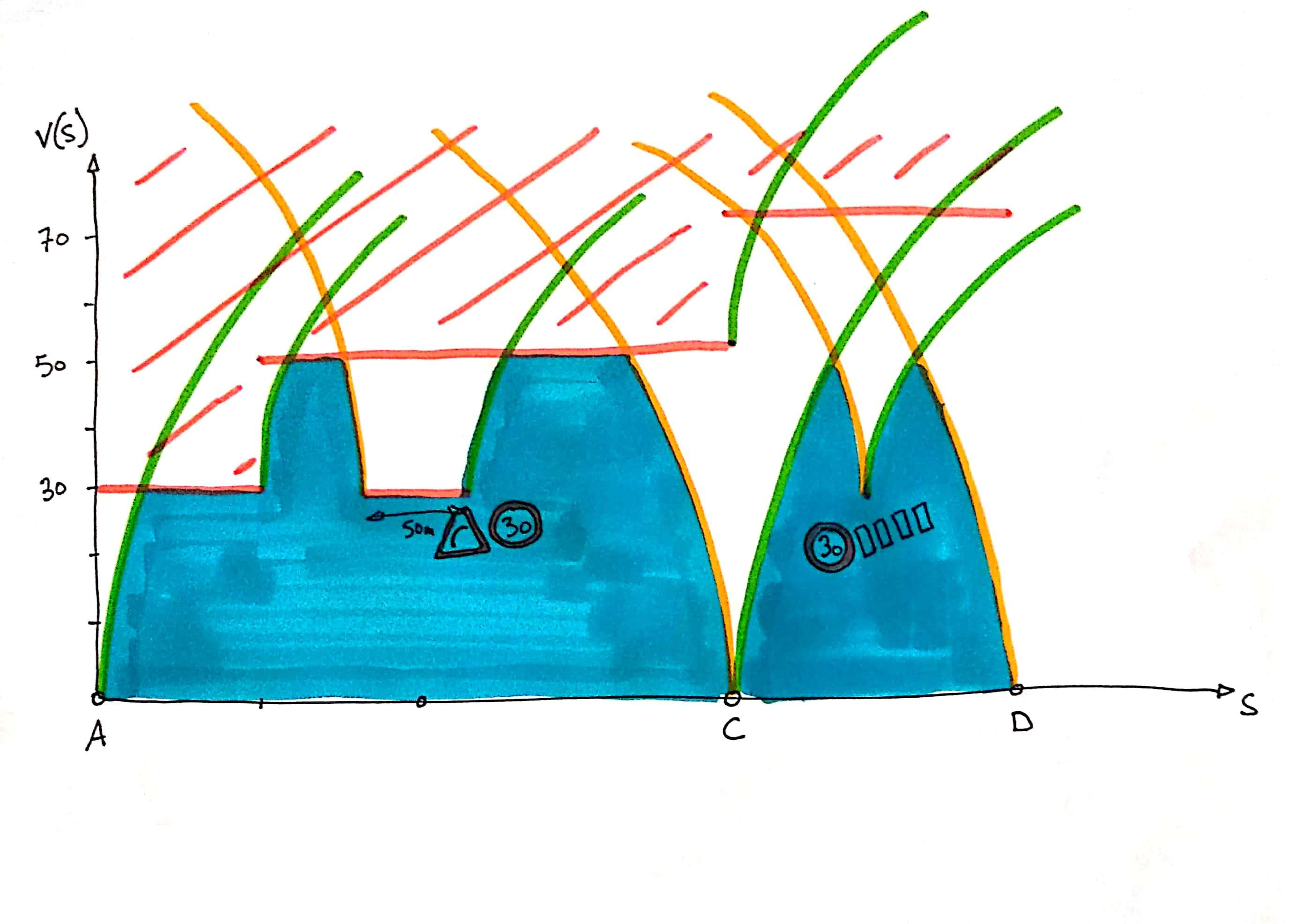

Preview of a migration process

Generalities

To migrate while keeping data safe, you need to test, test again and then test some more your upgrade procedure. You need to test it on a copy of your cluster. You can use pg_basebackup to make such a copy if you’re planning to do an inplace upgrade.

Document your procedure and measure the time it takes to plan the downtime. Indeed, from the moment the migration has started (when you backup basically), write operations on the cluster will have to be stopped. However, it will often be possible to keep on reading data.

Finally, remember to establish a list of tests to perform once the migration is done, to ensure that it is successful (for instance, you can write a test for each view you’ve modified on the old cluster during the test phase).

Simple case: cluster without PostGIS

Migrating a cluster without PostGIS is often quite simple: we – always – check the changelog, we correct what affects us, a blow of pg_upgrade and it’s over.

We still retest our views, because recent versions of PostgreSQL are more strict on the typing of queries (*). Also, some meta-schema tables or views may change, be sure to check if you’re relying on it. There are other small breaking changes, but ultimately they are all pretty easy to fix.

With PostGIS (and other extensions, especially the one using external binaries), you have to be a little more careful.

Simple case: same PostGIS version

If you stay on the same versions of PostGIS, then the migration process will be as follows:

- install the packages of the new version of PostgreSQL and those of PostGIS that correspond to this version of PostgreSQL (or compile the sources for example)

- use pg_upgrade (or pg_upgradecluster under debian / ubuntu) to migrate the cluster. It may be wise to block the port temporarily, as pg_upgrade will use this port for the new cluster (the old cluster will be on the next free port), you will need to do some data checking before opening the new cluster to users.

More complicated case: different version of PostGIS

There, we will have to go through a pg_dump / pg_restore, the old way.

For the sake of completeness, be aware that some people attempt high-flying operations using symbolic links in the PostgreSQL lib directory. I do not recommend it (**): you will have no feedback if some of your views are invalid because they use deleted functions for example. On the contrary, pg_restore will refuse to restore the views in question which will allow you to correct them immediately.

It will not be possible here to detail the process precisely because it will depend on many factors. However, in general terms:

- when we migrate a cluster, we migrate the databases with pg_dump, but also the global objects (roles, global permissions, etc …) with pg_dumpall –globals-only. pg_dumpall is also capable of backing up databases (like pg_dump), but it can’t do it in custom format (pg_dump‘s -Fc option), which for me is a big loss of flexibility in our case.

- you must first restore the global objects, then each DB after having created it and activated PostGIS.

- Use the –exit-on-error option of pg_restore. This is essential in order not to miss any errors during the restoration. Alternatively, you can redirect all logs to a file and review it later. But after the first mistake, you will not be sure that the following ones are not simply a consequence of the first one. With exit-on-error, pg_restore stops immediately and forces you to correct your views as you go, which you will have to do anyway.

- Some errors are not important. For example, creating the public schema will likely fail. You can remove this schema before the restore, but it is also possible to selectively exclude statements during restore, with the list function of pg_restore:

- then it is necessary to iterate on this process by correcting / updating the source database until the restoration passes!

Among the incompatibilities that you may encounter:

- Those related to PostgreSQL changes (see the section on migration without postgis).

- replace PostGIS functions that have been renamed or removed.

It’s too long, what to do?

I have already encountered bases so large that the downtime required to migrate seemed unmanageable.

We are talking about bases larger than 100GB and whose restoration exceeded one week! There, normally, the seasoned sysDBA that you are must say to yourself: “but wait, even for 200GB it shouldn’t take a week, right?”.

Absolutely ! In all of the very long restore cases that I have encountered, it was actually the refresh of materialized views, which some users seem to like a lot, that was endless.

If you think you are concerned, think about cleaning up your old views (“is this view which takes 8 days to refresh really critical for my business?”). Or take a little time to optimize them (“Look at this, a view which depends on this other view which itself depends on another … and none has a geographic index!”).

But sometimes it is not that simple and above all, the one who migrates is not necessarily the one who created these views, which can make the work very tedious or even impossible in the short term. In this case, consider using the archive list system described above (pg_restore –list and –use-list options with the custom archive format of pg_dump). By excluding all lines containing “MATERIALIZED VIEW DATA”, you will be able to restore your archive without refreshing these views, and do so later.

For this refresh, you can take inspiration from these requests for exploring the view dependency graph. It is even possible to restore only part of it, if you select the parts of the dependency tree that match your need! It’s a bit technical but very effective, trust me 🙂

Conclusion

Migrating an old PostgreSQL cluster with PostGIS is no easy task. It will require taking the time to test and update the old database, which is long and tedious. If you can, it is always better to do this regularly, as this decreases both the time and risk, and generally makes the process less difficult.

If you already have several versions overdue, don’t panic! The tools at your disposal in the PostgreSQL universe will allow you to achieve your goals, at the cost of a little effort.

Some tips to make future migrations easier:

- Migrate regularly

- Clean regularly your database

- Make regular backups

- Add unit tests for critical data and functions (why not with pgtap if you are postgres-centric?)

- Give in to the temptation of the materialized view only as a last resort! Before that, optimize your views by checking their execution plan, set indexes, review the queries and possibly your model, etc. Keep it Simple, Stupid!

- When you can, having a pre-production or acceptance environment to test the proper functioning of the applications using your database is a real plus.

(*) I did not find a reference to this breaking change in the changelogs, but I have often noticed it on upgrades from versions <10.

(**) That being said, if you like to gamble and are sure of yourself, don’t hesitate to give it a try and get back to me! I tried, it might work, but it’s ultimately less practical than the pg_dump / pg_restore in my opinion.